The aim of the prcbench package is to provide a testing workbench for evaluating precision-recall curves under various conditions. It contains integrated interfaces for the following five tools. It also contains predefined test data sets.

| Tool | Language | Link |
|---|---|---|
| precrec | R | Tool web site, CRAN |
| ROCR | R | Tool web site, CRAN |
| PRROC | R | CRAN |
| AUCCalculator | Java | Tool web site |
| PerfMeas | R | CRAN |

Disclaimer: prcbench was originally developed to help our precrec library provide fast and accurate calculations of precision-recall curves with extra functionality.

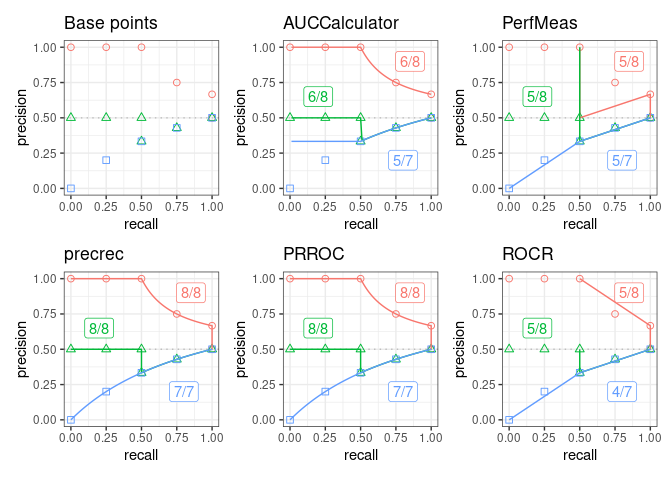

prcbench uses pre-defined test sets to help evaluate the accuracy of precision-recall curves.

create_toolset: creates objects of different tools for testing (5 different tools)

create_testset: selects pre-defined data sets (c1, c2, and c3)

run_evalcurve: evaluates the selected tools on the simulation data

autoplot: shows the results with ggplot2 and patchwork

## Load library

library(prcbench)

## Plot base points and the result of 5 tools on pre-defined test sets (c1, c2, and c3)

toolset <- create_toolset(c("precrec", "ROCR", "AUCCalculator", "PerfMeas", "PRROC"))

testset <- create_testset("curve", c("c1", "c2", "c3"))

scores1 <- run_evalcurve(testset, toolset)

autoplot(scores1, ncol = 3, nrow = 2)
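To make concrete what the tools above are being compared on, here is a minimal base R sketch of how precision-recall points can be computed from scores and labels. The helper `pr_points` and the toy data are hypothetical and not part of prcbench or of any listed tool. The sketch ignores tied scores and interpolation between points, so it should be read as an illustration under those assumptions rather than a reference implementation.

```r
# Hypothetical helper (not part of prcbench): compute one precision-recall
# point per observation by treating the top-k scores as predicted positives.
# Assumes labels are 0/1 and that there are no tied scores.
pr_points <- function(scores, labels) {
  stopifnot(length(scores) == length(labels), all(labels %in% c(0, 1)))
  ord <- order(scores, decreasing = TRUE)
  labels <- labels[ord]
  tp <- cumsum(labels == 1)  # true positives among the top-k
  fp <- cumsum(labels == 0)  # false positives among the top-k
  data.frame(
    recall = tp / sum(labels == 1),
    precision = tp / (tp + fp)
  )
}

# Toy data invented for illustration
scores <- c(0.9, 0.8, 0.7, 0.6, 0.5, 0.4)
labels <- c(1, 1, 0, 1, 0, 0)

pts <- pr_points(scores, labels)
print(pts)
```

Each row corresponds to one score cutoff, ordered from the highest score to the lowest.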

prcbench helps create simulation data to measure computational times of creating precision-recall curves.

create_toolset: creates objects of different tools for testing

create_testset: creates simulation data

run_benchmark: evaluates the selected tools on the simulation data

## Load library

library(prcbench)

## Run benchmark for auc5 (5 tools) on b10 (balanced 5 positives and 5 negatives)

toolset <- create_toolset(set_names = "auc5")

testset <- create_testset("bench", "b10")

res <- run_benchmark(testset, toolset)

print(res)

| testset | toolset | toolname | min | lq | mean | median | uq | max | neval |
|---|---|---|---|---|---|---|---|---|---|
| b10 | auc5 | AUCCalculator | 1.21 | 1.43 | 1.70 | 1.58 | 1.77 | 2.49 | 5 |
| b10 | auc5 | PerfMeas | 0.07 | 0.07 | 0.10 | 0.07 | 0.08 | 0.20 | 5 |
| b10 | auc5 | precrec | 4.47 | 4.52 | 4.73 | 4.75 | 4.87 | 5.04 | 5 |
| b10 | auc5 | PRROC | 0.17 | 0.18 | 0.23 | 0.18 | 0.19 | 0.44 | 5 |
| b10 | auc5 | ROCR | 1.81 | 1.81 | 1.89 | 1.82 | 1.84 | 2.16 | 5 |
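The summary columns in the table can be read as statistics over repeated runs. The following base R sketch shows how one such row could be derived from raw timings, assuming that `lq` and `uq` denote the lower and upper quartiles (as in microbenchmark's summary output) and that `neval` is the number of runs. The function `summarise_timings` and the timing values are hypothetical and are not output from prcbench.

```r
# Hypothetical raw timings for five repeated runs (invented values)
timings <- c(1.2, 1.5, 1.1, 1.9, 1.3)

# Hypothetical helper: collapse raw timings into one summary row
summarise_timings <- function(x) {
  q <- quantile(x, probs = c(0.25, 0.75), names = FALSE)
  data.frame(
    min = min(x),
    lq = q[1],        # lower quartile
    mean = mean(x),
    median = median(x),
    uq = q[2],        # upper quartile
    max = max(x),
    neval = length(x) # number of evaluations
  )
}

timing_row <- summarise_timings(timings)
print(timing_row)
```

With only five evaluations, the mean can be pulled upward by a single slow run, so the median is often the more stable column to compare across tools.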

Introduction to prcbench – a package vignette that contains the descriptions of the functions with several useful examples. View the vignette with vignette("introduction", package = "prcbench") in R.

Help pages – all the functions including the S3 generics have their own help pages with plenty of examples. View the main help page with help(package = "prcbench") in R.

install.packages("prcbench")

Evaluating AUCCalculator requires a Java runtime environment (>= 6).

You can install a development version of prcbench from our GitHub repository.

devtools::install_github("evalclass/prcbench")

Make sure you have a working development environment.

Windows: Install Rtools (available on the CRAN website).

Mac: Install Xcode from the Mac App Store.

Linux: Install a compiler and various development libraries (details vary across different flavors of Linux).

Install devtools from CRAN with install.packages("devtools").

Install prcbench from the GitHub repository with devtools::install_github("evalclass/prcbench").

microbenchmark does not work on some operating systems. prcbench uses system.time when microbenchmark is not available.
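A fallback of this kind can be sketched in a few lines of R. The helper `time_ms` and its `force_sys_time` argument are hypothetical names introduced for illustration. This is not prcbench's internal code, only one plausible way to choose between microbenchmark and system.time.

```r
# Hypothetical helper (not prcbench's implementation): run `f` repeatedly and
# return the elapsed time of each run in milliseconds.
time_ms <- function(f, times = 5, force_sys_time = FALSE) {
  if (!force_sys_time && requireNamespace("microbenchmark", quietly = TRUE)) {
    # microbenchmark reports each evaluation in nanoseconds
    res <- microbenchmark::microbenchmark(f(), times = times)
    res$time / 1e6
  } else {
    # system.time reports elapsed seconds; coarser but always available
    vapply(seq_len(times), function(i) {
      unname(system.time(f())["elapsed"]) * 1000
    }, numeric(1))
  }
}

# Example workload invented for illustration
workload <- function() sort(runif(1e4))

elapsed_default <- time_ms(workload, times = 5)
elapsed_fallback <- time_ms(workload, times = 5, force_sys_time = TRUE)
print(elapsed_default)
print(elapsed_fallback)
```

The system.time path has a much coarser resolution, so very fast calls may report values at or near zero.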

sudo R CMD javareconf

Precrec: fast and accurate precision-recall and ROC curve calculations in R

Takaya Saito; Marc Rehmsmeier

Bioinformatics 2017; 33 (1): 145-147.

doi: 10.1093/bioinformatics/btw570

Classifier evaluation with imbalanced datasets – our web site that contains several pages with useful tips for performance evaluation on binary classifiers.

The Precision-Recall Plot Is More Informative than the ROC Plot When Evaluating Binary Classifiers on Imbalanced Datasets – our paper that summarized potential pitfalls of ROC plots with imbalanced datasets and advantages of using precision-recall plots instead.
